Last Updated on May 15, 2025 by DarkNet

Last month, TikTok feeds erupted with whimsical, pastel‑toned selfies—ordinary users reimagined as Ghibli protagonists—propelled by a filter that racked up 50 million downloads in a weekend. The craze spotlights a new breed of AI generators that mimic Ghibli’s hand‑drawn warmth, flooding Instagram, X, and Reddit with enchanted avatars. Yet behind the charm lies a debate: Is this a democratized renaissance of visual storytelling or a storm of copyright infringement, privacy leaks, and algorithmic bias? In the pages ahead, we unpack how the technology works, where it sources its training data, and why lawyers, artists, and investors are watching closely. We’ll dissect legal precedents, track the cash behind portrait apps, and end with guidelines for creating and sharing responsibly.

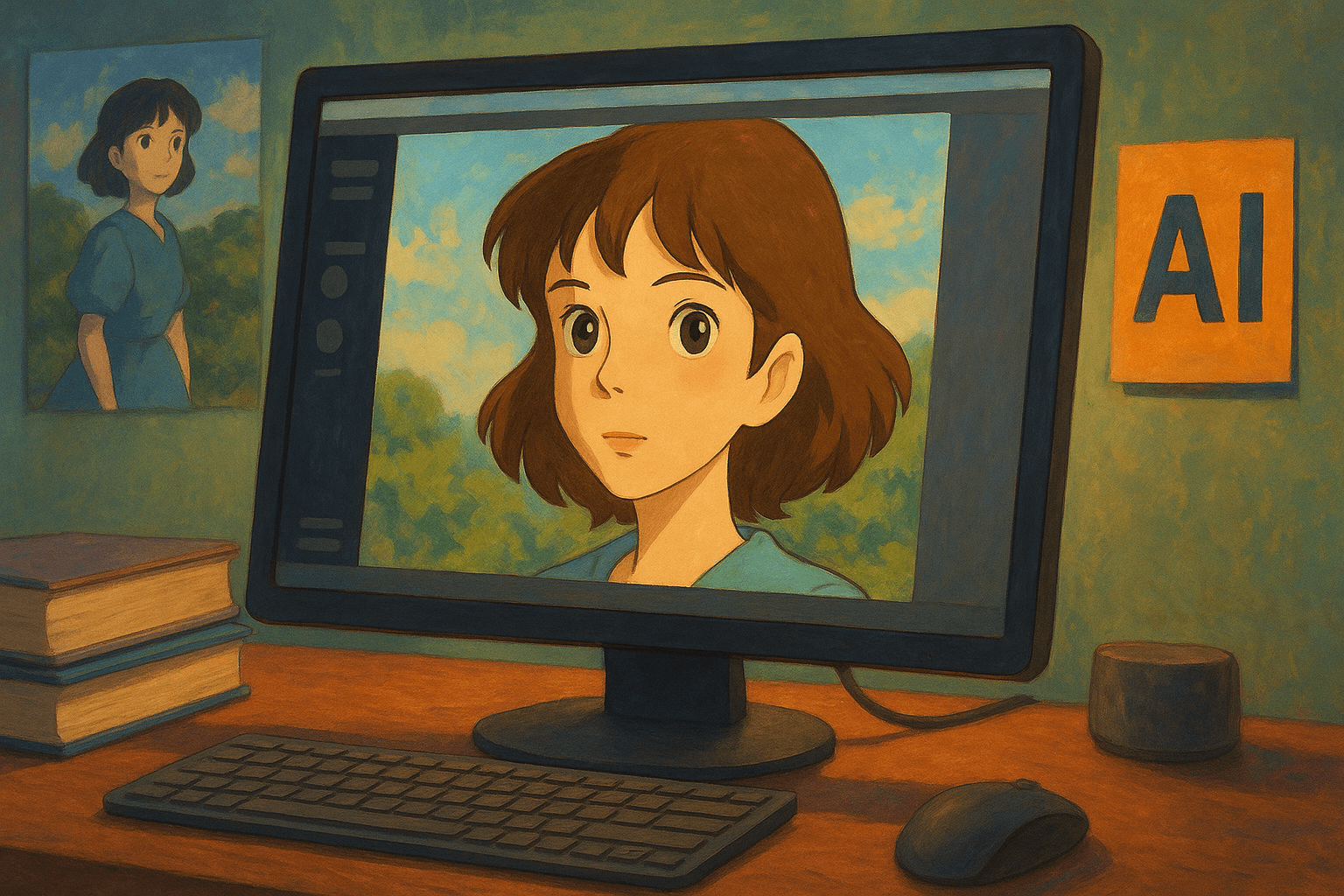

The Surge of Ghibli‑Inspired AI Art

What began as a niche experiment in late 2024 has exploded into a full‑blown phenomenon. On TikTok, the hashtag #Ghibli now tops 456K posts, while #StudioGhibli exceeds 599K, fueling millions of video views each week. Instagram mirrors the momentum: the umbrella tag #Ghibli has amassed more than 2.6 million posts and is growing by roughly 30 posts per hour. A single X (formerly Twitter) post by software engineer Grant Slatton, showing his family “Ghiblified” with ChatGPT’s GPT‑4o image generation, garnered 42K likes and nearly 27 million views in two days, igniting the viral wave.

The appeal cuts across generations and geographies. Gen Z creators in Jakarta remix their selfies with Totoro‑esque backdrops, while millennials in São Paulo “Ghiblify” wedding photos; even corporate brands in New York have adopted the style for promotional campaigns. Studio Ghibli’s universal themes—nostalgia, environmental wonder, gentle whimsy—translate seamlessly across cultures, making its visual language a ready canvas for global self‑expression.

Driving the trend are next‑gen image generators such as Midjourney v6, Stable Diffusion XL, and OpenAI’s GPT‑4o image engine. These systems can ingest a plain selfie and, within seconds, output hand‑drawn‑looking frames with signature watercolor skies and wide‑eyed characters. The democratization thrills hobbyists and marketers alike, but it also strains infrastructure: OpenAI temporarily capped image generation after CEO Sam Altman warned that demand was “melting GPUs.”

Unsurprisingly, the surge has sparked heated debates. Artists laud the fresh storytelling palette, while legal scholars question whether training on copyrighted frames crosses fair‑use lines. As AI continues to blur homage and imitation, the Ghibli craze stands as both a celebration of creativity and a bellwether for the cultural and ethical battles ahead.

How the Magic Happens: Inside the Diffusion Models

Diffusion models are high‑tech sculptors that begin with a block of visual “noise” and gradually chisel it into a picture. Training runs the process in reverse: random grain is added to millions of images until they dissolve into static, and the network learns to predict that noise so it can be subtracted step by step. At generation time, you supply a text prompt (“portrait in the style of Spirited Away”), and the model starts from pure static and removes noise across roughly 20–40 iterations until a coherent, Ghibli‑flavored scene emerges.
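The noising and denoising described above can be sketched in a few lines. This is a toy illustration on four numbers rather than a real image, assuming a standard linear noise schedule; the function names and schedule values are illustrative choices, and the "noise estimate" here is simply the true noise, standing in for what a trained network would predict.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward step used in training: blend the clean signal with Gaussian noise."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

def remove_predicted_noise(xt, eps_pred, alpha_bar):
    """Invert the blend, given a noise estimate (a trained network would supply it)."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps_pred)]

rng = random.Random(0)
pixels = [0.1, 0.5, 0.9, 0.3]            # stand-in for a tiny grayscale image
alpha_bars = alpha_bar_schedule(1000)    # later steps keep less of the original signal
xt, eps = add_noise(pixels, alpha_bars[500], rng)
# Oracle noise is used here purely for illustration; a real model only approximates it.
recovered = remove_predicted_noise(xt, eps, alpha_bars[500])
```

With a perfect noise estimate the original values come back exactly. Real samplers only have an approximate estimate, which is one reason they take many small steps instead of a single jump.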

Two ingredients give the output its signature charm. First is text‑to‑image conditioning: the system links words like “pastel sky” or “soft watercolor shading” to visual patterns it learned during training. Second is style transfer via fine‑tuning: artists retrain part of the network, or attach small adapter weights such as LoRA, on a small set of Ghibli frames or fan art, nudging the baseline model toward wide‑eyed faces and muted palettes.

Open‑source engines such as Stable Diffusion XL excel at customization. Users can run them locally, swap checkpoints, and edit the code, but they demand technical know‑how and offer fewer guardrails. Proprietary platforms like DALL·E 3 or Midjourney v6 deliver turnkey quality and strict moderation, yet hide their weights and limit fine‑tuning, frustrating power users.

Training data ultimately shapes the magic—and the controversy. Models soaked in copyrighted film stills reproduce Ghibli motifs with uncanny fidelity, unlocking fresh creative avenues while inviting lawsuits over unauthorized use. Whether inspiration outweighs infringement hinges on how, and if, those images are legally licensed.

Copyright, Trademarks, and the Right of Publicity

In the United States, copyright protects “original works of authorship,” including the specific character designs, backgrounds, and frames of Studio Ghibli films, though not an artistic style or color palette in the abstract. When an AI model outputs an image “substantially similar” to protected elements, it may be deemed an infringing copy or an unauthorized derivative work. The user or developer who directed the generation could then face infringement claims if no license exists. The Supreme Court’s 2023 decision in Andy Warhol Foundation v. Goldsmith narrowed fair‑use defenses, signaling that a new aesthetic alone may not qualify as transformative.

Trademark law adds another layer. Totoro’s silhouette and the Studio Ghibli word mark are registered identifiers of source. Using them—or close look‑alikes—in AI portraits can trigger consumer‑confusion or dilution claims under the Lanham Act. The Rogers v. Grimaldi test offers some leeway for expressive works, but commercial uses such as profile‑picture generators or branded merchandise are less likely to pass.

Right‑of‑publicity statutes, which vary by state, protect a person’s name, likeness, and persona. If an AI model blends Ghibli traits with a recognizable celebrity face, it could violate publicity rights, as seen in disputes like White v. Samsung.

Liability flows downstream. Users who share infringing portraits can receive takedown notices; platforms may lose safe‑harbor protections if they ignore repeat offenders; and model providers risk contributory infringement if their training data knowingly incorporates unlicensed frames. Clear licensing agreements, robust content filters, and transparent provenance logs are emerging as best practices to navigate this legal minefield.

Biometric Data & Privacy Laws

Every Ghibli‑style selfie begins with a face scan, and that scan is biometric data—precise measurements of the eyes, nose, and jaw that cannot be changed like a password. When apps store these vectors, they create a permanent identifier that bad actors could hijack for deepfakes, mass surveillance, or identity theft. Even “anonymized” embeddings can sometimes be reverse‑engineered, raising alarms among privacy advocates.

In the United States, the California Consumer Privacy Act (CCPA) grants residents the right to know, delete, and opt out of the sale of their biometric information, while Illinois’s Biometric Information Privacy Act (BIPA) goes further by requiring written consent before collection and imposing statutory damages of $1,000 per negligent violation and $5,000 per intentional or reckless violation. High‑profile settlements with Facebook and Clearview AI show how costly missteps can be.

Internationally, the EU’s General Data Protection Regulation (GDPR) classifies biometric data as “special‑category” information, demanding explicit consent, data‑minimization, and clear retention limits. Japan’s Act on the Protection of Personal Information (APPI) adopts a similar stance, obligating firms to specify usage purposes and secure cross‑border transfers.

The legal stakes are multifaceted. Users risk unauthorized profile cloning or unwitting enrollment in face‑recognition databases. Platforms may lose safe‑harbor protections and face class actions if consent flows or deletion protocols fall short. AI developers could incur joint liability for training on scraped selfies without verified permissions, especially as the EU AI Act’s transparency obligations phase in. Encrypting embeddings, limiting retention windows, and offering transparent opt‑in dialogs are emerging best practices to balance creative fun with privacy compliance.

Creative Economy: Who Wins, Who Loses?

The Ghibli‑inspired AI boom is reshaping the livelihoods of artists faster than any single stylistic trend in recent memory. Freelance illustrators who once charged $200‑$500 for hand‑drawn character portraits report a 30 percent dip in commission inquiries on platforms like Fiverr and DeviantArt, as clients opt for $9 “Ghiblify” apps that deliver instant gratification. Mid‑tier studios also feel the squeeze: one Los Angeles animation boutique told Variety that half of its concept‑art bids were undercut by AI vendors in Q1 2025.

Yet the same technology opens new doors for small creators. Etsy shop owners are selling personalized AI prints at scale, bundling them with physical frames and exceeding the margins of traditional digital downloads. Indie game developers, once priced out of bespoke art, can now prototype worlds with Ghibli‑esque assets and redirect budgets toward narrative design.

Pricing dynamics are shifting accordingly. The floor for quick‑turnaround character art has collapsed, but premium, human‑crafted illustrations that emphasize originality and client collaboration are commanding higher rates as a luxury good. Market saturation, however, threatens long‑tail creators: with thousands of near‑identical AI portraits flooding Instagram, discoverability drops and advertising costs rise.

Intellectual‑property frictions further complicate the ledger. Sellers risk takedowns if their AI outputs echo copyrighted Ghibli elements too closely, while buyers face uncertainty over commercial usage rights. In sum, AI democratizes access to whimsical visuals and spawns fresh entrepreneurial niches, but it also erodes certain revenue streams and introduces legal gray zones—creating clear winners, clear losers, and a vast middle ground in flux.

Social & Psychological Ripple Effects

The pastel glow of a Ghibli filter can feel like instant nostalgia, but the emotional trade‑offs run deeper than a fleeting burst of likes. Psychologists note that AI‑perfected portraits reinforce “idealized cuteness”—big eyes, flawless skin, delicate color palettes—that rarely match real‑world faces. For teens already navigating body‑image pressures, repeatedly posting stylized avatars may widen the gap between self‑perception and reality, elevating risks of anxiety and dysmorphia. A 2024 Pew study found that 38 percent of Gen Z users felt “less attractive” after comparing their selfies to AI‑enhanced versions shared by peers.

Authenticity norms are also shifting. When every profile photo looks like a frame from My Neighbor Totoro, the line between genuine self‑expression and algorithmic conformity blurs. Early ethnographic research from the University of Michigan suggests that frequent filter users are more likely to equate creativity with prompt‑crafting skills rather than traditional artistry, potentially redefining what society deems “creative.” Conversely, some communities harness the style for positive identity play—neurodivergent users on TikTok report that Ghibli avatars help them visualize emotions they struggle to express in words.

At a cultural level, mass adoption could standardize a single, whimsical aesthetic, crowding out diverse visual traditions. Critics warn of “soft cultural homogenization,” where global feeds recycle the same motifs, eroding local art forms. Yet proponents argue that the trend democratizes storytelling, giving anyone the tools to craft cinematic personas.

Ultimately, the psychological impact hinges on digital literacy. Clear labeling, mindful usage, and balanced media diets will be crucial in ensuring that the magic of Ghibli‑style AI uplifts rather than undermines mental well‑being.

Comparison Corner: FaceApp, Lensa, and Beyond

FaceApp, Lensa, and the new wave of Ghibli‑style generators all promise Hollywood‑grade makeovers, but their underlying philosophies—and risk profiles—differ sharply. FaceApp, launched in 2017, pioneered cloud‑based facial retouching with one‑tap aging and gender‑swap filters. The app is freemium: casual users view ads while power users pay $39.99 per year for unlimited edits. Critics blasted its early policy allowing “perpetual, irrevocable” rights to uploaded images; the company now pledges 48‑hour deletion, yet stores metadata for analytics.

Lensa, by Prisma Labs, shot to No. 1 on the App Store in 2022 with its “Magic Avatars” feature powered by Stable Diffusion. Users pay a flat $7.99–$17.99 per avatar pack, generating 50–200 portraits in various art styles. Lensa claims it erases raw photos after processing and lets subscribers opt out of future model training, but class‑action lawsuits allege it still exploits copyrighted art scraped from the web.

Ghibli‑style generators—a mix of open‑source notebooks and niche mobile apps—operate on thinner margins. Many are donation‑based or charge micro‑fees per render, relying on community‑trained checkpoints. Their interfaces skew toward advanced sliders and prompt boxes, rewarding users who understand concepts like CFG scale and negative prompts. Because most run on public diffusion weights, they offer minimal content moderation and place legal liability squarely on the user.
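For readers unfamiliar with the sliders mentioned above, here is a minimal sketch of how CFG (classifier-free guidance) scale and a negative prompt are commonly combined at each denoising step. It assumes the widespread convention in which the negative prompt's prediction takes the place of the unconditional one; the function name and the toy two-element vectors are illustrative, not taken from any specific tool.

```python
def guided_noise(eps_prompt, eps_negative, cfg_scale):
    """Push the noise estimate away from the negative prompt and toward the prompt.

    eps_prompt   -- model's noise prediction conditioned on the user's prompt
    eps_negative -- prediction conditioned on the negative (or empty) prompt
    cfg_scale    -- 1.0 follows the prompt prediction as-is; larger values exaggerate
                    the difference between the two predictions
    """
    return [neg + cfg_scale * (pos - neg)
            for pos, neg in zip(eps_prompt, eps_negative)]

# Toy predictions for a two-"pixel" image
eps_prompt = [1.0, 2.0]
eps_negative = [0.0, 1.0]
plain = guided_noise(eps_prompt, eps_negative, 1.0)    # equals eps_prompt
strong = guided_noise(eps_prompt, eps_negative, 7.5)   # exaggerated prompt direction
```

Higher scales tend to follow the prompt more literally at the cost of variety, which is why these tools expose the value as a slider rather than fixing it.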

In short, mainstream apps trade simplicity and polish for deeper data collection and stricter paywalls, while indie tools deliver customization at the cost of legal ambiguity and a steeper learning curve. For creators and consumers alike, choosing a platform now means balancing convenience, privacy, and artistic control.

Risk‑Mitigation Toolkit for Users & Developers

From prompt to post, every step of Ghibli‑style generation can be hardened against legal and privacy pitfalls. Begin with responsible sourcing: train or fine‑tune models only on images that are public‑domain, licensed, or self‑created. Developers should embed automatic citation tags or invisible watermarks (e.g., Stable Signature, Digimarc) so ownership can be traced if content is scraped.

For end users, the safest route is local or offline inference on consumer GPUs via Stable Diffusion WebUI or InvokeAI. Running models locally keeps biometric embeddings off external servers and enables noise‑injection or style‑mix layers that reduce one‑to‑one similarity with protected frames. If cloud apps are unavoidable, read privacy policies for retention periods, model‑training opt‑outs, and data‑deletion workflows before uploading selfies.

Both creators and platforms should employ hash‑matching and perceptual‑fingerprinting tools such as Google’s Content Safety API or the open‑source Traceroute‑AI to detect unauthorized reposts. GitHub projects like ClipRepro and Laion‑Watermark‑Detector can scan datasets for copyrighted material before training commences.
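To make perceptual fingerprinting concrete, the sketch below implements a simple "average hash" in plain Python. It is not the method used by any of the tools named above; it assumes the image has already been decoded into a grid of grayscale values at least 8 pixels on each side, and the sample images are synthetic.

```python
def average_hash(gray, hash_size=8):
    """Return a hash_size*hash_size-bit fingerprint of a grayscale image.

    gray is a 2D list of brightness values (rows of equal length),
    with at least hash_size rows and columns.
    """
    height, width = len(gray), len(gray[0])
    cells = []
    for r in range(hash_size):
        r0, r1 = r * height // hash_size, (r + 1) * height // hash_size
        for c in range(hash_size):
            c0, c1 = c * width // hash_size, (c + 1) * width // hash_size
            block = [gray[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))   # shrink by block averaging
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)  # 1 = brighter than average
    return bits

def hamming_distance(a, b):
    """Number of differing bits; small distances suggest the same picture."""
    return bin(a ^ b).count("1")

# Synthetic 16x16 gradient, a uniformly brightened copy, and a negative of it
original = [[8 * x + 4 * y for x in range(16)] for y in range(16)]
brighter = [[v + 10 for v in row] for row in original]
inverted = [[255 - v for v in row] for row in original]

same = hamming_distance(average_hash(original), average_hash(brighter))
different = hamming_distance(average_hash(original), average_hash(inverted))
```

Unlike a cryptographic hash, this fingerprint survives uniform brightness changes, so a lightly edited repost still matches. In practice a distance threshold would have to be tuned, and near-matches need human review.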

To guard against model leakage, maintain versioned model cards that document data sources, licensing, and safety filters. Incorporate rate limits and prompt auditing to deter mass scraping or malicious deepfakes. When distributing checkpoints, add ethical‑use licenses (e.g., RAIL) that explicitly forbid commercial exploitation of infringing outputs.
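The rate limits mentioned above are often implemented with a token bucket. The sketch below is one minimal way to do it, assuming a single process and one bucket per user or API key; the class name, capacity, and refill rate are illustrative.

```python
import time

class TokenBucket:
    """Allow short bursts up to `capacity`, then `refill_per_second` requests over time."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock                 # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, else False."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill for the time that has passed, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

# Example: at most 2 renders in a burst, then 1 per second (fake clock for the demo)
fake_now = [0.0]
bucket = TokenBucket(capacity=2, refill_per_second=1.0, clock=lambda: fake_now[0])
burst = [bucket.allow(), bucket.allow(), bucket.allow()]
fake_now[0] = 1.0                          # one second later
after_wait = bucket.allow()
```

A production service would keep the bucket state in shared storage and pair it with logging of rejected requests, which is where prompt auditing can hook in.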

Finally, cultivate a feedback loop: publish transparency reports, honor DMCA takedowns swiftly, and encourage community bug‑bounty programs focused on privacy flaws. A layered approach—technical safeguards, clear governance, and informed user behavior—keeps the wonder of Ghibli‑inspired AI art within legal and ethical bounds.

The Regulatory Horizon

Legislators worldwide are racing to catch up with the creative and legal turbulence unleashed by generative AI. In Brussels, negotiators reached provisional agreement on the EU AI Act in December 2023, and the regulation entered into force in August 2024, with obligations phasing in over the following years. Generative systems face transparency duties, and certain uses of biometric data can fall into the “high‑risk” tier. Providers of general‑purpose models must document their models, publish a summary of their training data, and respect opt‑outs by rights holders, with fines of up to €35 million or 7 percent of global turnover for the most serious violations.

Across the Atlantic, Congress is debating several deepfake‑focused bills. The NO FAKES Act would grant individuals a federal right over digital replicas of their voice and likeness, while the DEEPFAKES Accountability Act would mandate digital watermarks and origin disclosures on synthetic media. Several states are moving faster: Illinois amended BIPA to cover AI avatars, and California’s AB 730 imposes civil liability for deceptive election‑related deepfakes distributed within 60 days of an election.

Asia is forging its own path. Japan’s Ministry of Economy, Trade and Industry (METI) issued Generative AI Guidelines in early 2025, urging platforms to verify licenses for copyrighted inputs and recommending “privacy impact assessments” when face data are processed. China’s Cyberspace Administration already requires a conspicuous “AI‑generated” label on synthetic images and reserves the right to audit model weights.

Collectively, these measures target three flashpoints: copyright infringement, biometric misuse, and broader ethical harms such as disinformation. Compliance costs will rise—especially for open‑source projects—but so will market clarity. Developers that invest early in provenance tracking and rights management may gain a competitive edge, while users can expect clearer consent flows and safer creative playgrounds as the regulatory fog lifts.

Comparative Analysis Table

| Platform | Data Handling Practices | Monetization Model | User Interface Design | Legal Controversies | Copyright Issues |
|---|---|---|---|---|---|
| FaceApp | Photos processed in the cloud and cached for 24–48 hours before deletion; metadata stripped and encrypted during transit | Freemium (ads) with a $39.99/year “Pro” tier | One‑tap, mobile‑first filters; minimal user input | Recurrent privacy criticism over broad license language and Russian‑hosted servers | Low: filters remix user‑supplied photos rather than third‑party IP |
| Lensa | Requires 8–20 selfies; stores facial geometry for model training; retention period not disclosed | Pay‑per‑pack avatars ($3.99–$7.99) plus optional subscription | Guided wizard with preset styles; limited fine‑tuning | Illinois BIPA class action over biometric capture; concerns about sexualized outputs | Uses Stable Diffusion weights trained on scraped art, prompting artist copyright claims |
| Ghibli‑Style Generators | Varies: open‑source notebooks keep data local; cloud sites log analytics and payments via third parties | Donationware or micro‑fees per render; some offer unlimited tiers | Power‑user dashboards with prompt boxes, CFG sliders, model‑swap options | Sparse moderation and disclaimers; liability shifted to users | High risk of derivative works that closely mimic Studio Ghibli frames, raising infringement concerns |

Analysis

A side‑by‑side view reveals stark trade‑offs. FaceApp prioritizes ease and speed, but its cloud‑first workflow still alarms privacy watchdogs despite the 48‑hour deletion window. Lensa monetizes novelty through pay‑per‑pack avatars, yet its biometric retention and pending BIPA lawsuit highlight how quickly AI aesthetics can collide with state law. Ghibli‑style generators occupy a different niche: they cater to hobbyists who crave deep control, but their fragmented ecosystem offers scant legal or technical safeguards. For users, choosing a platform now means balancing convenience against data exposure and potential IP liability. Creators and developers, meanwhile, must weigh revenue models against the compliance costs of stricter privacy statutes and emerging copyright scrutiny. Together, these contrasts illustrate the broader tension in generative art: democratization of powerful tools on one hand, and an expanding minefield of legal, ethical, and economic risks on the other.

Quick Tips for Secure, Ghibli‑Style Creativity

- Check the fine print: Read the platform’s privacy policy for data‑retention windows, biometric consent clauses, and opt‑out options before uploading any selfies.

- Opt for local generation: Whenever possible, use offline tools like Stable Diffusion WebUI to keep facial embeddings on your own device.

- Watermark wisely: Add a subtle signature or invisible watermark (e.g., Digimarc, Stable Signature) to help trace unauthorized reposts.

- Use throwaway photos: If cloud processing is unavoidable, upload low‑resolution or masked images to limit the value of stolen data.

- Secure your exports: Strip metadata from downloaded images and store originals in encrypted folders.

- Mind the prompts: Avoid entering personal identifiers or proprietary content that could leak in future model updates.

- Monitor reuse: Set up Google Reverse Image or ClipRepro alerts to catch unauthorized distribution early.

- Stay updated: Follow regulatory changes (CCPA, GDPR, BIPA) and platform policy updates to ensure ongoing compliance.

Conclusion

Ghibli‑style AI portraits capture the wonder of hand‑drawn animation and place it at everyone’s fingertips, but they also surface hard questions about copyright, privacy, and mental well‑being. As diffusion models democratize visual storytelling, users, developers, and lawmakers must navigate a maze of legal gray zones, biometric risks, and shifting creative economies. The path forward lies in informed, responsible experimentation—licensing data, safeguarding faces, and demanding transparency. Embrace the magic, but help shape the rules, so tomorrow’s innovations uplift artists, audiences, and the law alike.